An indepth look into how Vectors operate and how they look in memory.

Vectors are used a lot in C++ as the go-to dynamic size container, but how do they actually work and what do they look like on the stack and heap?

Well, I recently asked a question on a Discord server about the possibility of using a map that would keep its insertion order, and I thought about using a vector with the type std::pair, which within is a std::string to act as the key, and std::vector<std::string> to hold values. However, I was told this would not be a good idea at all due to vectors reallocating once they exceed their capacity, and I decided to look into it as I had only considered vectors in a very surface-level manner.

Here is the code I was asking about:

std::vector<std::pair<std::string, std::vector<std::string>>>C++ Vectors: How do Capacities change?

If you have any knowledge of vectors, you will know they are dynamic in size, and adding (push_back) a value to a vector will increase its size and potentially its capacity. Now, its important to know the difference between the size and the capacity.

std::vector<int> vec { 0, 1, 2, 3, 4, 5, 6, 7, 8, 9 };

std::cout << "Capacity: " << vec.capacity() << '\n';

std::cout << "Size: " << vec.size() << '\n';

std::cout << "--------------\n";

vec.resize(5);

std::cout << "Capacity: " << vec.capacity() << '\n';

std::cout << "Size: " << vec.size() << '\n';Output:

Capacity: 10

Size: 10

--------------

Capacity: 10

Size: 5In the above code, we create a vector with 10 elements, and this also sets the capacity to 10. We then resize the vector to contain 5 elements, however, the space allocated for the vector still stays the same. In simple terms:

- Capacity is the amount of space allocated for the vector elements

- Size is the amount of elements within the vector

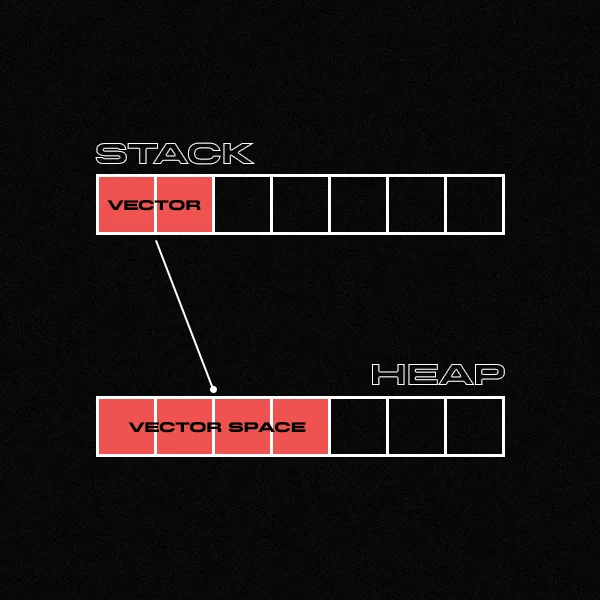

You might not know, but when we create a vector, we allocate space on the heap for each of the elements.

C++ Vectors: What happens when Capacities change?

Now that we know how a standard Vector looks on the Stack/Heap and understand the difference between size and capacity, we can start looking at what happens when we exceed the capacity of the vector. In our above code example we have a capacity of 10, but what if I add another value to the vector?

std::vector<int> vec { 0, 1, 2, 3, 4, 5, 6, 7, 8, 9 };

std::cout << "Capacity: " << vec.capacity() << '\n';

std::cout << "--------------\n";

int x {10};

vec.push_back(x);

std::cout << "New Capacity: " << vec.capacity() << '\n';Output:

Capacity: 10

--------------

New Capacity: 15The above output might not be what you expected; you probably assumed it would be 11, but that’s not the case. The amount the capacity increases by is dependent on the compiler. MSVC will increase the capacity by x1.5 but most implementations will increase it by x2. Now, the question is how does this look on the heap?

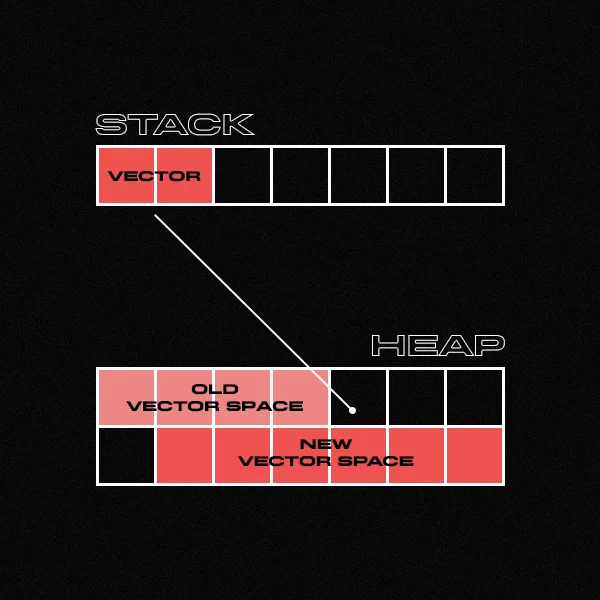

The issue arises when the size exceeds the capacity; the space allocated on the heap for the elements is no longer suitable for the Vector, so it needs more space. You might assume that it will just add more space at the currently allocated space, however, it actually needs to be moved to a different space, deallocating the old space.

So if we had a vector with the following:

- Capacity: 4

- Size: 4

If we then add one value to the vector with the MSVC compiler it will then have the following details:

- Capacity: 6

- Size: 5

C++ Vectors: How to avoid reallocation

If you want to avoid the constant reallocation when inserting a value into a vector when it exceeds its capacity, you should set an initial size for the vector. This is especially useful if you know the vector won’t exceed a certain size.

std::vector<int> vec(100);const int elementSize { 100 };

std::vector<int> vec;

vec.reserve(size);The above code snippets creates a vector with a capacity of 100. Therefore, as long as the capacity isn’t exceeded, no reallocation will need to occur. The difference between them is the first snippet will initialise the memory; the second snippet wont.

Benchmarking

I thought it would be a good idea to demonstrate the difference between initialising the size of the vector, not initialising the vector and reserving the vector.

There are plenty of ways of benchmarking code, but for this example I’m going to use the simple one used in Cherno’s Benchmarking video.

// Initialising the size of the vector

{

Timer time("Initialised size test");

std::vector<int> vec(100000);

for (int i{ 0 }; i < 100000; ++i)

{

vec.push_back(i + 2);

}

}After running this 10 different times it averages 1105.1us (1.1051ms).

// Uninitialised vector

{

Timer time("Unitialised size test");

std::vector<int> vec;

for (int i{ 0 }; i < 100000; ++i)

{

vec.push_back(i + 2);

}

}The above was also ran 10 times and it averaged a run time of 998.4us (0.9984ms), so not much of a difference between the initialised size for the vector.

// Vector size reserved

{

Timer time("Reserved size test");

const int elementSize{ 100000 };

std::vector<int> vec;

vec.reserve(elementSize);

for (int i{ 0 }; i < 100000; ++i)

{

vec.push_back(i + 2);

}

}This is where it gets interesting, after running this 10 times it averaged a run time of 442.2us (0.4422ms) which is over twice as fast as the previous examples!

| Type of Vector Creation | Average Runtime |

| Initialised size of the vector | 1105.1us (1.1051ms) |

| Unitialised vector | 998.4us (0.9984ms) |

| Reserved vector | 442.2us (0.4422ms) |

Resources

If you enjoy delving into things then I recommend having a read of my blog post, How Does IAT Hooking Work, and What Are Its Issues?

Leave a Reply